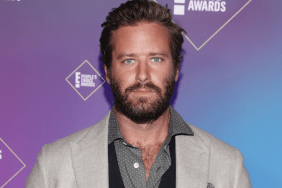

Image Credit: Dave J Hogan / Getty Images

Stephen Hawking held a Reddit Ask Me Anything (AMA) session to field questions from the site’s users, most of which centered on artificial intelligence. The AMA proved so popular that it became the third largest in Reddit’s history, with over nine thousand comments submitted.

The theoretical physicist has spoken about his fears regarding AI in the past, and Reddit users asked him to elaborate on the likelihood of humanity one day creating superintelligent AI. His answers offer an interesting insight into one of the world’s most brilliant minds.

Take a look through his responses below:

On technological unemployment…

If machines produce everything we need, the outcome will depend on how things are distributed. Everyone can enjoy a life of luxurious leisure if the machine-produced wealth is shared, or most people can end up miserably poor if the machine-owners successfully lobby against wealth redistribution. So far, the trend seems to be toward the second option, with technology driving ever-increasing inequality.

On why artificial intelligence is a threat to humanity…

We need to avoid the temptation to anthropomorphize and assume that AIs will have the sort of goals that evolved creatures do. An AI that has been designed rather than evolved can in principle have any drives or goals. However, as emphasized by Steve Omohundro, an extremely intelligent future AI will probably develop a drive to survive and acquire more resources as a step toward accomplishing whatever goal it has, because surviving and having more resources will increase its chances of accomplishing that other goal. This can cause problems for humans whose resources get taken away.

On whether AI could become more intelligent than its creator…

It’s clearly possible for something to acquire higher intelligence than its ancestors: we evolved to be smarter than our ape-like ancestors, and Einstein was smarter than his parents. The line you ask about is where an AI becomes better than humans at AI design, so that it can recursively improve itself without human help. If this happens, we may face an intelligence explosion that ultimately results in machines whose intelligence exceeds ours by more than ours exceeds that of snails.

On whether the risk of creating superintelligent AI has been overblown…

Media often misrepresent what is actually said. The real risk with AI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble. You’re probably not an evil ant-hater who steps on ants out of malice, but if you’re in charge of a hydroelectric green energy project and there’s an anthill in the region to be flooded, too bad for the ants. Let’s not place humanity in the position of those ants.

On his favorite song, movie, and last funny thing he saw online…

“Have I Told You Lately” by Rod Stewart; Jules et Jim (1962); The Big Bang Theory.

On the one mystery he finds the most intriguing…

Women. My PA reminds me that, although I have a PhD in physics, women should remain a mystery.

On how he feels humanity should handle the creation of superintelligent AI…

There’s no consensus among AI researchers about how long it will take to build human-level AI and beyond, so please don’t trust anyone who claims to know for sure that it will happen in your lifetime or that it won’t happen in your lifetime. When it eventually does occur, it’s likely to be either the best or worst thing ever to happen to humanity, so there’s huge value in getting it right. We should shift the goal of AI from creating pure undirected artificial intelligence to creating beneficial intelligence. It might take decades to figure out how to do this, so let’s start researching this today rather than the night before the first strong AI is switched on.

On whether AI could be driven to survive and reproduce…

An AI that has been designed rather than evolved can in principle have any drives or goals. However, as emphasized by Steve Omohundro, an extremely intelligent future AI will probably develop a drive to survive and acquire more resources as a step toward accomplishing whatever goal it has, because surviving and having more resources will increase its chances of accomplishing that other goal. This can cause problems for humans whose resources get taken away.

[Via Reddit]